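# Import the required modules
import torch
import torch.nn as nn
import torch.optim as optim

# Input shape: batch size * channels (1: grayscale, 3: color) * height * width
inputs = torch.randn(1, 1, 28, 28)  # dummy image filled with random values; only the shape matters here
print(inputs.shape)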

# First Conv2D: 32 filters of size 3x3; "same" padding keeps the 28x28 size
conv1 = nn.Conv2d(in_channels=1, out_channels=32, kernel_size=3, padding="same")
out = conv1(inputs)
print(out.shape)  # torch.Size([1, 32, 28, 28])
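To see what `padding="same"` does, here is a small sketch using a hypothetical 3×3 all-ones input and one 3×3 all-ones filter with no bias. These values are chosen only so the sums can be checked by hand. With `kernel_size=3`, "same" adds one ring of zeros around the input, so the output keeps the 3×3 size. Each output value then equals the number of real pixels the filter overlaps.

```python
import torch
import torch.nn as nn

# Hypothetical toy input: 1 image, 1 channel, 3x3 pixels, all ones
x = torch.ones(1, 1, 3, 3)

# One 3x3 filter with every weight set to 1 and no bias, so each output is the sum of the covered inputs
conv = nn.Conv2d(in_channels=1, out_channels=1, kernel_size=3, padding="same", bias=False)
with torch.no_grad():
    conv.weight.fill_(1.0)

out = conv(x)
print(out.shape)  # torch.Size([1, 1, 3, 3]) -> spatial size unchanged
# Corners overlap 4 real pixels, edges 6, the center 9; the rest of each window is zero padding
print(out[0, 0])
```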

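# First MaxPool2D: 2x2 windows (stride defaults to the kernel size) halve the height and width
pool = nn.MaxPool2d(kernel_size=2)
out = pool(out)
print(out.shape)  # torch.Size([1, 32, 14, 14])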
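Max pooling keeps only the largest value in each window. A hand-checkable sketch on a hypothetical 4×4 input holding the numbers 0 to 15:

```python
import torch
import torch.nn as nn

# Hypothetical 4x4 input with the values 0..15 so the maxima are easy to read:
# [[ 0,  1,  2,  3],
#  [ 4,  5,  6,  7],
#  [ 8,  9, 10, 11],
#  [12, 13, 14, 15]]
x = torch.arange(16, dtype=torch.float32).reshape(1, 1, 4, 4)

pool = nn.MaxPool2d(kernel_size=2)  # stride defaults to kernel_size, so the 2x2 windows do not overlap
out = pool(x)

print(out.shape)  # torch.Size([1, 1, 2, 2]) -> height and width are halved
# The maximum of each 2x2 window: 5 (top-left), 7 (top-right), 13 (bottom-left), 15 (bottom-right)
print(out[0, 0])
```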

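# Second Conv2D: 64 filters, size kept at 14x14
conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=3, padding="same")
out = conv2(out)
print(out.shape)  # torch.Size([1, 64, 14, 14])

# Second MaxPool2D: 14x14 -> 7x7
pool = nn.MaxPool2d(kernel_size=2)
out = pool(out)
print(out.shape)  # torch.Size([1, 64, 7, 7])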

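# Flatten every dimension except the batch dimension
flatten = nn.Flatten()
out = flatten(out)
print(out.shape)  # torch.Size([1, 3136]), since 64 * 7 * 7 = 3136

# Fully connected layer: 3136 features -> 10 class scores (digits 0-9)
fc = nn.Linear(3136, 10)
out = fc(out)
print(out.shape)  # torch.Size([1, 10])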
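The shapes printed above follow the usual output-size formula for convolution and pooling with dilation 1: out = floor((in + 2·padding − kernel) / stride) + 1. The helper `conv_out_size` below is a hypothetical name used only for illustration. It replays the layer sequence and shows where the 3136 in `nn.Linear(3136, 10)` comes from.

```python
def conv_out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a conv or pooling layer (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 28
size = conv_out_size(size, kernel=3, padding=1)  # conv1: "same" padding for a 3x3 kernel is 1 -> 28
size = conv_out_size(size, kernel=2, stride=2)   # pool1 -> 14
size = conv_out_size(size, kernel=3, padding=1)  # conv2 -> 14
size = conv_out_size(size, kernel=2, stride=2)   # pool2 -> 7

flat_features = 64 * size * size  # 64 channels come out of conv2
print(size, flat_features)  # 7 3136
```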

Classifying MNIST with a CNN

# Import the required modules
import torch
import torch.nn as nn
import torch.optim as optim
import torchvision.datasets as datasets
import torchvision.transforms as transforms
import matplotlib.pyplot as plt
from torch.utils.data import DataLoader

# Use the GPU if one is available
device = "cuda" if torch.cuda.is_available() else "cpu"
print(device)

# Training data (60,000 images); ToTensor() turns each image into a 1x28x28 float tensor in [0, 1]
train_data = datasets.MNIST(
    root="data",
    train=True,
    transform=transforms.ToTensor(),
    download=True,
)

# Test data (10,000 images)
test_data = datasets.MNIST(
    root="data",
    train=False,
    transform=transforms.ToTensor(),
    download=True,
)

loader = DataLoader(
    dataset=train_data,
    batch_size=64,
    shuffle=True,
)
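With `batch_size=64`, a `DataLoader` yields ceil(N / 64) batches. The last batch holds the remainder, because the default `drop_last=False` keeps it. A quick sketch with a placeholder `TensorDataset` of 10,000 zero-filled items (the size of the MNIST test split, not the real images) shows the effect. The same arithmetic gives 938 batches for the 60,000 training images, with a final batch of 32.

```python
import math

import torch
from torch.utils.data import DataLoader, TensorDataset

# Placeholder dataset with as many items as the MNIST test split; every value is zero
dummy = TensorDataset(torch.zeros(10000, 1), torch.zeros(10000, dtype=torch.long))
dummy_loader = DataLoader(dummy, batch_size=64, shuffle=True)

batch_sizes = [len(y) for _, y in dummy_loader]
print(len(dummy_loader), batch_sizes[-1])  # 157 16
print(math.ceil(60000 / 64), 60000 % 64)   # 938 32 (same arithmetic for the training split)
```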

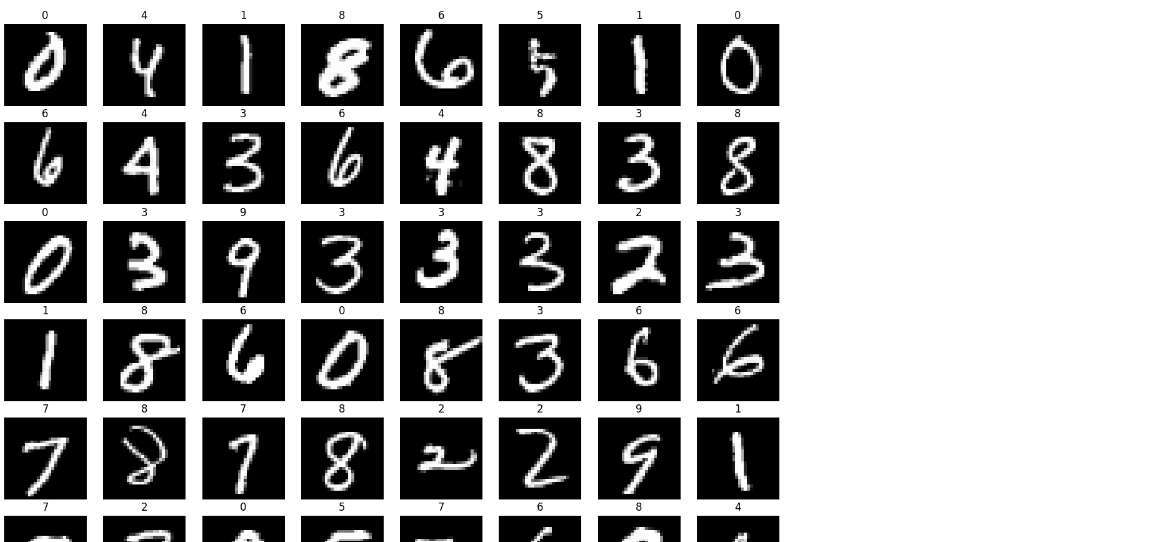

# Show the first batch of 64 training images in an 8x8 grid, each titled with its label
imgs, labels = next(iter(loader))
fig, axes = plt.subplots(8, 8, figsize=(16, 16))
for ax, img, label in zip(axes.flatten(), imgs, labels):
    ax.imshow(img.reshape((28, 28)), cmap="gray")
    ax.set_title(label.item())
    ax.axis("off")

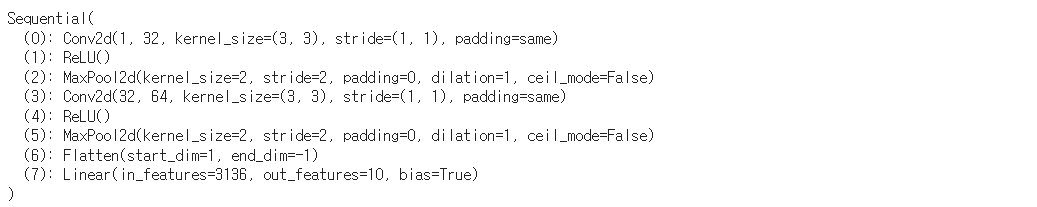

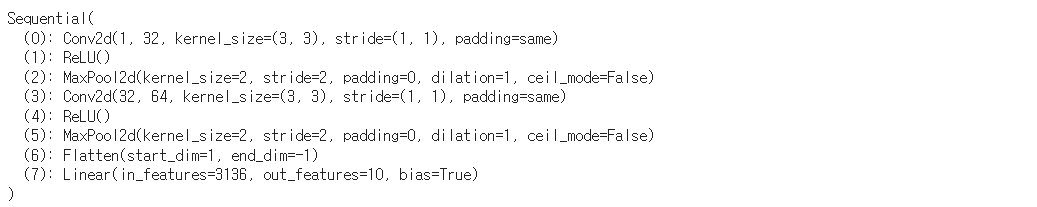

model = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=3, padding="same"),   # 1x28x28 -> 32x28x28
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),                       # -> 32x14x14
    nn.Conv2d(32, 64, kernel_size=3, padding="same"),  # -> 64x14x14
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),                       # -> 64x7x7
    nn.Flatten(),                                      # -> 3136
    nn.Linear(64*7*7, 10),                             # -> 10 class scores (logits)
).to(device)

print(model)
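The number of trainable parameters follows directly from the layer sizes. A `Conv2d` has `in_channels * out_channels * k * k` weights plus `out_channels` biases. A `Linear` has `in * out` weights plus `out` biases. The sketch below rebuilds the same layer stack on the CPU under the hypothetical name `model_copy` and checks the hand count: 320 + 18,496 + 31,370 = 50,186.

```python
import torch.nn as nn

# Same layer stack as the model above, built on the CPU only to count parameters
model_copy = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=3, padding="same"),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(32, 64, kernel_size=3, padding="same"),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
    nn.Linear(64 * 7 * 7, 10),
)

# Hand count: weights + biases of each layer that has parameters (ReLU, pooling, Flatten have none)
by_hand = (1 * 32 * 3 * 3 + 32) + (32 * 64 * 3 * 3 + 64) + (64 * 7 * 7 * 10 + 10)
n_params = sum(p.numel() for p in model_copy.parameters() if p.requires_grad)
print(n_params, by_hand)  # 50186 50186
```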

optimizer = optim.Adam(model.parameters(), lr=0.001)
criterion = nn.CrossEntropyLoss()  # applies log-softmax internally, so the model outputs raw logits
epochs = 10

for epoch in range(epochs):
    model.train()
    sum_losses = 0.0
    correct = 0
    total = 0

    for x_batch, y_batch in loader:
        x_batch = x_batch.to(device)
        y_batch = y_batch.to(device)

        y_pred = model(x_batch)
        loss = criterion(y_pred, y_batch)

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # .item() stores a plain float, so the computation graph is not kept alive across batches
        sum_losses += loss.item()

        # The index of the largest score is the predicted digit
        y_pred_index = torch.argmax(y_pred, dim=1)
        correct += (y_batch == y_pred_index).sum().item()
        total += len(y_batch)

    avg_loss = sum_losses / len(loader)
    avg_acc = correct / total * 100
    print(f"Epoch: {epoch + 1:4d}/{epochs} Loss: {avg_loss:.6f} Accuracy: {avg_acc:.2f}%")
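The training loop depends on two properties of logits. First, `nn.CrossEntropyLoss` combines log-softmax with negative log-likelihood, so the last layer should output raw scores rather than probabilities. Second, softmax preserves the ordering of scores within each row, so `argmax` over the logits picks the same class as `argmax` over the softmax probabilities. The check below uses random, made-up logits:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(5, 10)              # 5 hypothetical samples, 10 classes
targets = torch.tensor([3, 0, 9, 1, 7])  # made-up labels

# CrossEntropyLoss gives the same value as NLLLoss applied to log-softmax outputs
ce = nn.CrossEntropyLoss()(logits, targets)
manual = F.nll_loss(F.log_softmax(logits, dim=1), targets)
print(torch.allclose(ce, manual))

# Softmax keeps the ordering within each row, so the predicted classes are identical
same_pred = torch.equal(logits.argmax(dim=1), F.softmax(logits, dim=1).argmax(dim=1))
print(same_pred)
```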

test_loader = DataLoader(
    dataset=test_data,
    batch_size=64,
    shuffle=True,
)

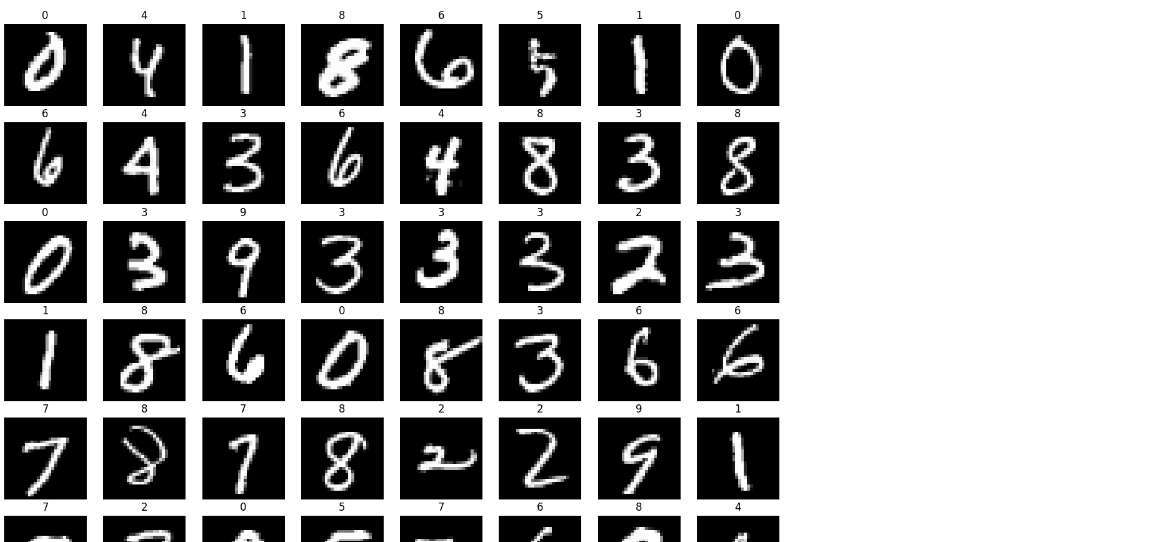

# Show one batch of 64 test images in an 8x8 grid, each titled with its label
imgs, labels = next(iter(test_loader))
fig, axes = plt.subplots(8, 8, figsize=(16, 16))
for ax, img, label in zip(axes.flatten(), imgs, labels):
    ax.imshow(img.reshape((28, 28)), cmap="gray")
    ax.set_title(label.item())
    ax.axis("off")

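# model.eval() switches the model to evaluation mode (it changes how layers such as Dropout and BatchNorm behave).
# It does not turn off gradient tracking by itself; torch.no_grad() is what does that.
model.eval()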
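`model.eval()` and `torch.no_grad()` do different jobs, and this is easy to check. After `eval()`, a forward pass still builds a gradient graph. Inside `torch.no_grad()`, it does not. The sketch below uses a throwaway `nn.Linear` layer as a hypothetical stand-in for the CNN:

```python
import torch
import torch.nn as nn

layer = nn.Linear(4, 2)  # hypothetical stand-in for the trained model
x = torch.randn(3, 4)

layer.eval()            # evaluation mode only (matters for Dropout/BatchNorm)
out_eval = layer(x)
print(out_eval.requires_grad)  # True -> gradients are still being tracked

with torch.no_grad():   # this is what actually disables gradient tracking
    out_nograd = layer(x)
print(out_nograd.requires_grad)  # False
```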

correct = 0
total = 0

# Evaluation needs no gradients, so turn tracking off to save memory and time
with torch.no_grad():
    for x_batch, y_batch in test_loader:
        x_batch = x_batch.to(device)
        y_batch = y_batch.to(device)

        y_pred = model(x_batch)
        y_pred_index = torch.argmax(y_pred, dim=1)

        # Count correct predictions so the smaller last batch is weighted by its real size
        correct += (y_batch == y_pred_index).sum().item()
        total += len(y_batch)

avg_acc = correct / total * 100
print(f"Test accuracy: {avg_acc:.2f}%")
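Averaging per-batch accuracies treats the short final batch as if it were as large as the others. For the 10,000-image test set with `batch_size=64`, that final batch has 16 images. Dividing the total number of correct predictions by the total number of samples avoids this. The numbers below are made up to exaggerate the effect:

```python
# Hypothetical results: (number correct, batch size) for two batches
batches = [(64, 64), (0, 16)]  # a full batch that is all correct, a short batch that is all wrong

# Mean of per-batch accuracies: every batch counts equally, whatever its size
mean_of_batches = sum(c / n for c, n in batches) / len(batches) * 100

# Overall accuracy: total correct divided by total samples
overall = sum(c for c, _ in batches) / sum(n for _, n in batches) * 100

print(f"{mean_of_batches:.1f}% vs {overall:.1f}%")  # 50.0% vs 80.0%
```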
